Building a Basic RAG Agent using GoodMem

What you’ll build

Retrieval-augmented generation (RAG) injects knowledge from external sources (for example, documents) into an LLM—no retraining required—so it can answer questions or make decisions it otherwise couldn't. That knowledge is hot-swappable: add, remove, or replace sources to update what the LLM should know.

GoodMem is the memory layer between your agent and its data, providing persistent, searchable context. It manages embedders, LLMs, and rerankers as first-class resources you wire together at query time, and it can be extended with advanced optimization via GoodMem Cloud Tuner (private beta).

In this tutorial, you'll see how quickly you can build a RAG agent in GoodMem.

Prerequisites

- Get an OpenAI API key. Then set the environment variable:

export OPENAI_API_KEY="your_openai_api_key"

- Install GoodMem:

curl -s https://get.goodmem.ai | bash -s -- --handsfree \
--db-password "your-secure-password-min-14-chars" \
--tls-disabled

The installation script will print the GoodMem REST API endpoint and a GoodMem API key. Write them down; we'll use them throughout this tutorial. For convenience, export them to your shell environment:

export GOODMEM_BASE_URL="your_goodmem_base_url"
export GOODMEM_API_KEY="your_goodmem_api_key"

Note that GOODMEM_BASE_URL should not contain the /v1 suffix.

- Install the SDK based on how you'll interface with GoodMem.

CLI: Nothing to do; the GoodMem CLI is installed as part of the GoodMem installation. Skip this section. If your server isn't running on the default gRPC endpoint (https://localhost:9090), add --server to the CLI commands (the CLI does not use GOODMEM_BASE_URL).

cURL: Nothing to do; cURL is standard on most Linux distributions. Skip this section.

Python:

pip install goodmem-client requests

Then set up your Python environment with the required imports and variables:

import requests
import json
import os
import base64

# Set up variables (instead of environment variables)
GOODMEM_BASE_URL = os.getenv("GOODMEM_BASE_URL")
GOODMEM_API_KEY = os.getenv("GOODMEM_API_KEY")
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")

Go:

GOPROXY="https://go-proxy.fury.io/weisi/" \
GOPRIVATE="github.com/PAIR-Systems-Inc/goodmem" \
GOSUMDB=off \
go get github.com/PAIR-Systems-Inc/goodmem/clients/go

Create a main.go file with the required imports and client configuration:

package main

import (
    "context"
    "encoding/base64"
    "encoding/json"
    "fmt"
    "log"
    "net/url"
    "os"
    "regexp"
    "strconv"
    "strings"
    "unicode/utf8"

    goodmem "github.com/PAIR-Systems-Inc/goodmem/clients/go"
)

// jsonUnescapeUnicode replaces JSON \uXXXX escapes with UTF-8 so printouts show real characters.
func jsonUnescapeUnicode(b []byte) []byte {
    re := regexp.MustCompile(`\\u([0-9a-fA-F]{4})`)
    return re.ReplaceAllFunc(b, func(m []byte) []byte {
        code, _ := strconv.ParseInt(string(m[2:]), 16, 32)
        var buf [utf8.UTFMax]byte
        n := utf8.EncodeRune(buf[:], rune(code))
        return buf[:n]
    })
}

func main() {
    // Set up variables
    goodmemBaseURL := os.Getenv("GOODMEM_BASE_URL")
    goodmemAPIKey := os.Getenv("GOODMEM_API_KEY")
    openAIAPIKey := os.Getenv("OPENAI_API_KEY")

    // Create client
    baseURL := strings.TrimRight(goodmemBaseURL, "/")
    cfg := goodmem.NewConfiguration()
    cfg.Servers = goodmem.ServerConfigurations{
        {URL: baseURL},
    }

    // Streaming client (e.g. RetrieveMemoryStreamAdvanced) requires Scheme and Host
    u, err := url.Parse(baseURL)
    if err != nil {
        log.Fatal("Invalid GOODMEM_BASE_URL: ", err)
    }
    cfg.Scheme = u.Scheme
    cfg.Host = u.Host
    cfg.AddDefaultHeader("x-api-key", goodmemAPIKey)

    client := goodmem.NewAPIClient(cfg)
    streamClient := goodmem.NewStreamingClient(client)
    ctx := context.Background()

The remaining Go snippets in this tutorial continue inside the main() function shown above.

Java:

Add the GoodMem client to your build.gradle:

repositories {
    mavenCentral()
    mavenLocal() // if the client is published to your local Maven repo
}

dependencies {
    implementation "ai.pairsys.goodmem:goodmem-client-java:latest.release"
    implementation "com.google.code.gson:gson:2.10.1"
}

Create src/main/java/Main.java with the required imports and client configuration:

import ai.pairsys.goodmem.client.ApiClient;
import ai.pairsys.goodmem.client.ApiException;
import ai.pairsys.goodmem.client.StreamingClient;
import ai.pairsys.goodmem.client.StreamingClient.*;
import ai.pairsys.goodmem.client.api.*;
import ai.pairsys.goodmem.client.model.*;
import com.google.gson.Gson;
import com.google.gson.GsonBuilder;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class Main {
    private static final Gson GSON = new GsonBuilder().disableHtmlEscaping().setPrettyPrinting().create();

    // Replaces JSON \uXXXX escapes with UTF-8 so printouts show real characters
    private static String jsonUnescapeUnicode(String json) {
        Matcher m = Pattern.compile("\\\\u([0-9a-fA-F]{4})").matcher(json);
        StringBuffer sb = new StringBuffer();
        while (m.find()) {
            String repl = new String(Character.toChars(Integer.parseInt(m.group(1), 16)));
            m.appendReplacement(sb, Matcher.quoteReplacement(repl));
        }
        m.appendTail(sb);
        return sb.toString();
    }

    public static void main(String[] args) throws ApiException, java.io.IOException, ai.pairsys.goodmem.client.StreamingClient.StreamError {
        // Set up variables from environment
        String goodmemBaseUrl = System.getenv("GOODMEM_BASE_URL");
        String goodmemApiKey = System.getenv("GOODMEM_API_KEY");
        String openAIApiKey = System.getenv("OPENAI_API_KEY");

        // Create client
        ApiClient apiClient = new ApiClient();
        apiClient.setBasePath(goodmemBaseUrl.replaceAll("/$", ""));
        ai.pairsys.goodmem.client.auth.ApiKeyAuth auth =
            (ai.pairsys.goodmem.client.auth.ApiKeyAuth) apiClient.getAuthentication("ApiKeyAuth");
        auth.setApiKey(goodmemApiKey);

        EmbeddersApi embeddersApi = new EmbeddersApi(apiClient);
        LlmsApi llmsApi = new LlmsApi(apiClient);
        SpacesApi spacesApi = new SpacesApi(apiClient);
        MemoriesApi memoriesApi = new MemoriesApi(apiClient);
        RerankersApi rerankersApi = new RerankersApi(apiClient);
        StreamingClient streamClient = new StreamingClient(apiClient);
    }
}

The remaining Java snippets in this tutorial continue inside the main method (or use the same apiClient and API instances in your own flow).

JavaScript:

npm install @pairsystems/goodmem-client

Then set up your Node.js script with the required imports and client configuration:

const GoodMemClient = require('@pairsystems/goodmem-client');
const { StreamingClient } = GoodMemClient;

// Set up variables from environment
const GOODMEM_BASE_URL = (process.env.GOODMEM_BASE_URL || '').replace(/\/$/, '');
const GOODMEM_API_KEY = process.env.GOODMEM_API_KEY;
const OPENAI_API_KEY = process.env.OPENAI_API_KEY;

// Configure the API client
const defaultClient = GoodMemClient.ApiClient.instance;
defaultClient.basePath = GOODMEM_BASE_URL;
defaultClient.defaultHeaders = {
    'X-API-Key': GOODMEM_API_KEY,
    'Content-Type': 'application/json',
    'Accept': 'application/json'
};

const embeddersApi = new GoodMemClient.EmbeddersApi();
const llmsApi = new GoodMemClient.LLMsApi();
const spacesApi = new GoodMemClient.SpacesApi();
const memoriesApi = new GoodMemClient.MemoriesApi();
const rerankersApi = new GoodMemClient.RerankersApi();
const streamingClient = new StreamingClient(defaultClient);

The remaining JavaScript snippets in this tutorial assume the above client and API instances are in scope (e.g., same file or module).

.NET:

Create a BasicRag.csproj file:

<Project Sdk="Microsoft.NET.Sdk">
  <PropertyGroup>
    <OutputType>Exe</OutputType>
    <TargetFramework>net8.0</TargetFramework>
  </PropertyGroup>
  <ItemGroup>
    <PackageReference Include="Pairsystems.Goodmem.Client" Version="*" />
  </ItemGroup>
</Project>

Create Program.cs with the required usings and client configuration:

using Pairsystems.Goodmem.Client;
using Pairsystems.Goodmem.Client.Api;
using Pairsystems.Goodmem.Client.Client;
using Pairsystems.Goodmem.Client.Model;

// Set up variables from environment
var goodmemBaseUrl = (Environment.GetEnvironmentVariable("GOODMEM_BASE_URL") ?? "").TrimEnd('/');
var goodmemApiKey = Environment.GetEnvironmentVariable("GOODMEM_API_KEY");
var openAIApiKey = Environment.GetEnvironmentVariable("OPENAI_API_KEY");

// Create client (pass Configuration to each API and StreamingClient)
var config = new Configuration
{
    BasePath = goodmemBaseUrl,
    DefaultHeaders = new Dictionary<string, string> { ["x-api-key"] = goodmemApiKey ?? "" }
};

var embeddersApi = new EmbeddersApi(config);
var llmsApi = new LLMsApi(config);
var spacesApi = new SpacesApi(config);
var memoriesApi = new MemoriesApi(config);
var rerankersApi = new RerankersApi(config);
var streamingClient = new StreamingClient(config);

The remaining .NET snippets in this tutorial assume the above client and API instances are in scope.

About the code examples: The snippets below show the happy path for learning purposes. In production, make the code more robust, for example by adding error handling.
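As one illustration of that kind of hardening, the sketch below retries a failing call with exponential backoff. `with_retries` and its parameters are hypothetical names introduced here, not part of any GoodMem SDK; it wraps any zero-argument callable, so it could surround a `requests.post(...)` call followed by `raise_for_status()`.

```python
import time


def with_retries(call, attempts=3, base_delay=0.5, retry_on=(Exception,), sleep=time.sleep):
    """Run call(); on failure wait base_delay * 2**n seconds and try again.

    Hypothetical helper for illustration. Re-raises the last error once
    `attempts` calls have failed.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the original error
            sleep(base_delay * (2 ** attempt))


if __name__ == "__main__":
    outcomes = iter([ConnectionError("boom"), "ok"])

    def flaky():
        # Fails once, then succeeds, standing in for a network request.
        result = next(outcomes)
        if isinstance(result, Exception):
            raise result
        return result

    print(with_retries(flaky, base_delay=0.01))
```

Retrying a create request can produce duplicates if the first attempt actually succeeded on the server, so it is safer to restrict `retry_on` to errors you know are safe to retry.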

Step 1: Register your embedder and LLM

At minimum, a RAG agent needs two kinds of neural network models to process information:

- an embedder, which converts information (for example, text, images, or audio) into a numerical vector (an embedding) for fast retrieval, and

- an LLM, which combines retrieved information with the user query to generate the final answer.
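To make the two roles concrete, here is a toy sketch in plain Python. The three-dimensional vectors and the sample sentences are hand-written stand-ins for real embeddings and documents, and `build_prompt` only assembles the text an LLM would receive; none of this is GoodMem's implementation.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vec, corpus, top_k=1):
    """Return the top_k texts whose vectors are most similar to query_vec."""
    ranked = sorted(corpus, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]


def build_prompt(question, passages):
    """Combine retrieved passages with the user question for the LLM."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Made-up texts with hand-made "embeddings" (a real embedder would produce the vectors).
CORPUS = [
    ("Employees accrue 15 vacation days per year.", [0.9, 0.1, 0.0]),
    ("The office is closed on public holidays.", [0.1, 0.9, 0.0]),
    ("Transformers use the attention mechanism.", [0.0, 0.1, 0.9]),
]

if __name__ == "__main__":
    query_vec = [0.8, 0.2, 0.1]  # pretend embedding of the question below
    passages = retrieve(query_vec, CORPUS, top_k=1)
    print(build_prompt("How many vacation days do I get?", passages))
```

The embedder's job corresponds to producing the vectors, and the LLM's job starts where `build_prompt` ends.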

The goal of this step is to bring the RAG agent to the following state:

┌──────────────────────────────────────┐
│    RAG Agent (under construction)    │
│      ┌──────────┐      ┌─────┐       │
│      │ Embedder │      │ LLM │       │
│      └──────────┘      └─────┘       │
└──────────────────────────────────────┘

Here we use OpenAI's text-embedding-3-large embedder and gpt-5.1 LLM for simplicity.

Step 1.1: Register the embedder

The commands below register the OpenAI text-embedding-3-large embedder in GoodMem and save its ID as the environment variable $EMBEDDER_ID for later use.

As you'll see later, giving every component an ID is a GoodMem feature that makes building and managing RAG agents easier and enables automated tuning.

In the sample outputs below, IDs are shown as [EMBEDDER_UUID]-style placeholders; your CLI will print different values unless you explicitly pass --id (see Client-Specified Identifiers).
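The CLI snippets in this tutorial capture each ID with `grep "^ID:"` and `awk '{print $2}'`. If you drive the CLI from Python instead, the same extraction might look like the sketch below; `extract_id` is a hypothetical helper, and it assumes the `ID: <value>` line format shown in the sample outputs.

```python
def extract_id(cli_output):
    """Return the second whitespace-separated field of the first line starting with 'ID:'.

    Mirrors `grep "^ID:" | awk '{print $2}'` for output with a single ID line.
    Raises ValueError if no such line exists.
    """
    for line in cli_output.splitlines():
        if line.startswith("ID:"):
            fields = line.split()
            if len(fields) >= 2:
                return fields[1]
    raise ValueError("no 'ID:' line found in CLI output")


if __name__ == "__main__":
    sample = "Embedder created successfully!\nID: [EMBEDDER_UUID]\nDisplay Name: OpenAI test\n"
    print(extract_id(sample))
```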

CLI:

goodmem embedder create \

--display-name "OpenAI test" \

--provider-type OPENAI \

--endpoint-url "https://api.openai.com/v1" \

--model-identifier "text-embedding-3-large" \

--dimensionality 1536 \

--cred-api-key ${OPENAI_API_KEY} | tee /tmp/embedder_output.txt

export EMBEDDER_ID=$(grep "^ID:" /tmp/embedder_output.txt | awk '{print $2}')

echo "Embedder ID: $EMBEDDER_ID"

cURL:

curl -X POST "$GOODMEM_BASE_URL/v1/embedders" \

-H "x-api-key: $GOODMEM_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"displayName": "OpenAI test",

"providerType": "OPENAI",

"endpointUrl": "https://api.openai.com/v1",

"modelIdentifier": "text-embedding-3-large",

"credentials": {

"kind": "CREDENTIAL_KIND_API_KEY",

"apiKey": {

"inlineSecret": "'"$OPENAI_API_KEY"'"

}

},

"dimensionality": 1536,

"distributionType": "DENSE"

}' | tee /tmp/embedder_response.json | jq

export EMBEDDER_ID=$(jq -r '.embedderId' /tmp/embedder_response.json)

echo "Embedder ID: $EMBEDDER_ID"

Python:

response = requests.post(

f"{GOODMEM_BASE_URL}/v1/embedders",

headers={

"x-api-key": GOODMEM_API_KEY,

"Content-Type": "application/json"

},

json={

"displayName": "OpenAI test",

"providerType": "OPENAI",

"endpointUrl": "https://api.openai.com/v1",

"modelIdentifier": "text-embedding-3-large",

"credentials": {

"kind": "CREDENTIAL_KIND_API_KEY",

"apiKey": {

"inlineSecret": OPENAI_API_KEY

}

},

"dimensionality": 1536,

"distributionType": "DENSE"

}

)

response.raise_for_status()

embedder_response = response.json()

EMBEDDER_ID = embedder_response["embedderId"]

print(f"Embedder ID: {EMBEDDER_ID}")

Go:

apiKeyAuth := goodmem.NewApiKeyAuth()

apiKeyAuth.SetInlineSecret(openAIAPIKey)

creds := goodmem.NewEndpointAuthentication(goodmem.CREDENTIAL_KIND_API_KEY)

creds.SetApiKey(*apiKeyAuth)

embedderReq := goodmem.NewEmbedderCreationRequest(

"OpenAI test",

goodmem.PROVIDER_OPENAI,

"https://api.openai.com/v1",

"text-embedding-3-large",

1536,

goodmem.DENSE,

)

embedderReq.SetCredentials(*creds)

embedderResp, _, err := client.EmbeddersAPI.CreateEmbedder(ctx).

EmbedderCreationRequest(*embedderReq).Execute()

if err != nil {

log.Fatal(err)

}

embedderID := embedderResp.GetEmbedderId()

fmt.Printf("Embedder ID: %s\n", embedderID)

Java:

ai.pairsys.goodmem.client.model.ApiKeyAuth openaiKeyAuth = new ai.pairsys.goodmem.client.model.ApiKeyAuth();

openaiKeyAuth.setInlineSecret(openAIApiKey);

EndpointAuthentication credentials = new EndpointAuthentication();

credentials.setKind(CredentialKind.CREDENTIAL_KIND_API_KEY);

credentials.setApiKey(openaiKeyAuth);

EmbedderCreationRequest embedderReq = new EmbedderCreationRequest();

embedderReq.setDisplayName("OpenAI test");

embedderReq.setProviderType(ProviderType.OPENAI);

embedderReq.setEndpointUrl("https://api.openai.com/v1");

embedderReq.setModelIdentifier("text-embedding-3-large");

embedderReq.setDimensionality(1536);

embedderReq.setDistributionType(DistributionType.DENSE);

embedderReq.setCredentials(credentials);

EmbedderResponse embedderResp = embeddersApi.createEmbedder(embedderReq);

String embedderID = embedderResp.getEmbedderId();

System.out.println("Embedder ID: " + embedderID);

JavaScript:

const embedderReq = {

displayName: 'OpenAI test',

providerType: 'OPENAI',

endpointUrl: 'https://api.openai.com/v1',

modelIdentifier: 'text-embedding-3-large',

dimensionality: 1536,

distributionType: 'DENSE',

credentials: {

kind: 'CREDENTIAL_KIND_API_KEY',

apiKey: { inlineSecret: OPENAI_API_KEY }

}

};

const embedderResp = await embeddersApi.createEmbedder(embedderReq);

const EMBEDDER_ID = embedderResp.embedderId;

console.log('Embedder ID:', EMBEDDER_ID);

.NET:

var credApiKey = new ApiKeyAuth { InlineSecret = openAIApiKey };

var creds = new EndpointAuthentication

{

Kind = CredentialKind.CREDENTIALKINDAPIKEY,

ApiKey = credApiKey

};

var embedderReq = new EmbedderCreationRequest(

displayName: "OpenAI test",

description: null,

providerType: ProviderType.OPENAI,

endpointUrl: "https://api.openai.com/v1",

apiPath: null,

modelIdentifier: "text-embedding-3-large",

dimensionality: 1536,

distributionType: DistributionType.DENSE,

maxSequenceLength: null,

supportedModalities: null,

credentials: creds,

labels: null,

varVersion: null,

monitoringEndpoint: null,

ownerId: null,

embedderId: null);

var embedderResp = embeddersApi.CreateEmbedder(embedderReq);

var embedderID = embedderResp.EmbedderId;

Console.WriteLine($"Embedder ID: {embedderID}");

The CLI commands above should print output like the following:

Embedder created successfully!

ID: [EMBEDDER_UUID]

Display Name: OpenAI test

Owner: [OWNER_UUID]

Provider Type: OPENAI

Distribution: DENSE

Endpoint URL: https://api.openai.com/v1

API Path: /embeddings

Model: text-embedding-3-large

Dimensionality: 1536

Created by: [CREATED_BY_UUID]

Created at: 2026-01-11T21:47:30Z

Each client ends by printing the embedder ID:

Embedder ID: [EMBEDDER_UUID]

Step 1.2: Register the LLM

The commands below register OpenAI's gpt-5.1 LLM in GoodMem and save the LLM ID as the environment variable $LLM_ID for later use.

CLI:

goodmem llm create \

--display-name "My GPT-5.1" \

--provider-type OPENAI \

--endpoint-url "https://api.openai.com/v1" \

--model-identifier "gpt-5.1" \

--cred-api-key ${OPENAI_API_KEY} \

--supports-chat | tee /tmp/llm_output.txt

export LLM_ID=$(grep "^ID:" /tmp/llm_output.txt | awk '{print $2}')

echo "LLM ID: $LLM_ID"

cURL:

curl -X POST "${GOODMEM_BASE_URL}/v1/llms" \

-H "Content-Type: application/json" \

-H "x-api-key: ${GOODMEM_API_KEY}" \

-d '{

"displayName": "My GPT-5.1",

"providerType": "OPENAI",

"endpointUrl": "https://api.openai.com/v1",

"modelIdentifier": "gpt-5.1",

"credentials": {

"kind": "CREDENTIAL_KIND_API_KEY",

"apiKey": {

"inlineSecret": "'"${OPENAI_API_KEY}"'"

}

}

}' | tee /tmp/llm_response.json | jq

export LLM_ID=$(jq -r '.llm.llmId' /tmp/llm_response.json)

echo "LLM ID: $LLM_ID"

Python:

# Register the LLM

response = requests.post(

f"{GOODMEM_BASE_URL}/v1/llms",

headers={

"Content-Type": "application/json",

"x-api-key": GOODMEM_API_KEY

},

json={

"displayName": "My GPT-5.1",

"providerType": "OPENAI",

"endpointUrl": "https://api.openai.com/v1",

"modelIdentifier": "gpt-5.1",

"credentials": {

"kind": "CREDENTIAL_KIND_API_KEY",

"apiKey": {

"inlineSecret": OPENAI_API_KEY

}

}

}

)

response.raise_for_status()

llm_response = response.json()

LLM_ID = llm_response["llm"]["llmId"]

print(f"LLM ID: {LLM_ID}")

Go:

llmKeyAuth := goodmem.NewApiKeyAuth()

llmKeyAuth.SetInlineSecret(openAIAPIKey)

llmCreds := goodmem.NewEndpointAuthentication(goodmem.CREDENTIAL_KIND_API_KEY)

llmCreds.SetApiKey(*llmKeyAuth)

llmReq := goodmem.NewLLMCreationRequest(

"My GPT-5.1",

goodmem.OPENAI,

"https://api.openai.com/v1",

"gpt-5.1",

)

llmReq.SetCredentials(*llmCreds)

llmResp, _, err := client.LLMsAPI.CreateLLM(ctx).

LLMCreationRequest(*llmReq).Execute()

if err != nil {

log.Fatal(err)

}

llmID := llmResp.Llm.GetLlmId()

fmt.Printf("LLM ID: %s\n", llmID)

Java:

ai.pairsys.goodmem.client.model.ApiKeyAuth llmKeyAuth = new ai.pairsys.goodmem.client.model.ApiKeyAuth();

llmKeyAuth.setInlineSecret(openAIApiKey);

EndpointAuthentication llmCreds = new EndpointAuthentication();

llmCreds.setKind(CredentialKind.CREDENTIAL_KIND_API_KEY);

llmCreds.setApiKey(llmKeyAuth);

LLMCreationRequest llmReq = new LLMCreationRequest();

llmReq.setDisplayName("My GPT-5.1");

llmReq.setProviderType(LLMProviderType.OPENAI);

llmReq.setEndpointUrl("https://api.openai.com/v1");

llmReq.setModelIdentifier("gpt-5.1");

llmReq.setCredentials(llmCreds);

CreateLLMResponse llmResp = llmsApi.createLLM(llmReq);

String llmID = llmResp.getLlm().getLlmId();

System.out.println("LLM ID: " + llmID);

JavaScript:

const llmReq = {

displayName: 'My GPT-5.1',

providerType: 'OPENAI',

endpointUrl: 'https://api.openai.com/v1',

modelIdentifier: 'gpt-5.1',

credentials: {

kind: 'CREDENTIAL_KIND_API_KEY',

apiKey: { inlineSecret: OPENAI_API_KEY }

}

};

const llmResp = await llmsApi.createLLM(llmReq);

const LLM_ID = llmResp.llm.llmId;

console.log('LLM ID:', LLM_ID);

.NET:

var llmKeyAuth = new ApiKeyAuth { InlineSecret = openAIApiKey };

var llmCreds = new EndpointAuthentication

{

Kind = CredentialKind.CREDENTIALKINDAPIKEY,

ApiKey = llmKeyAuth

};

var llmReq = new LLMCreationRequest(

displayName: "My GPT-5.1",

description: null,

providerType: LLMProviderType.OPENAI,

endpointUrl: "https://api.openai.com/v1",

apiPath: null,

modelIdentifier: "gpt-5.1",

supportedModalities: null,

credentials: llmCreds,

labels: null,

varVersion: null,

monitoringEndpoint: null,

capabilities: null,

defaultSamplingParams: null,

maxContextLength: null,

clientConfig: null,

ownerId: null,

llmId: null);

var llmResp = llmsApi.CreateLLM(llmReq);

var llmID = llmResp.Llm.LlmId;

Console.WriteLine($"LLM ID: {llmID}");

The CLI commands above should print output like the following:

LLM created successfully!

ID: [LLM_UUID]

Display Name: My GPT-5.1

Owner: [OWNER_UUID]

Provider Type: OPENAI

Endpoint URL: https://api.openai.com/v1

API Path: /chat/completions

Model: gpt-5.1

Modalities: TEXT

Capabilities: Chat, Completion, Functions, System Messages, Streaming

Created by: [CREATED_BY_UUID]

Created at: 2026-01-11T21:47:53Z

Capability Inference:

✓ Completion Support: true (detected from model family 'gpt-5')

✓ Function Calling: true (detected from model family 'gpt-5')

✓ System Messages: true (detected from model family 'gpt-5')

✓ Streaming: true (detected from model family 'gpt-5')

✓ Sampling Parameters: false (detected from model family 'gpt-5')

Each client ends by printing the LLM ID:

LLM ID: [LLM_UUID]

Step 2: Add knowledge to a space

A RAG agent's knowledge comes from memories. A memory can correspond to a PDF document, an email, a Markdown file, and so on. Memories can be grouped into collections called spaces (a.k.a. corpora) so you can easily control what an agent can access. In GoodMem, an agent can tap into multiple spaces. In this tutorial, we will create one space.

The goal of this step is to bring the RAG agent to the following state, where knowledge is written into the space from sources:

              ┌──────────────────────────────────────┐
              │    RAG Agent (under construction)    │
┌───────────┐ │      ┌──────────┐      ┌─────┐       │
│ Knowledge ├─┼──────▶ Embedder │      │ LLM │       │
│  Source   │ │      └────┬─────┘      └─────┘       │
└───────────┘ │           │ (write                   │
              │           │  into)                   │
              │      ┌────▼─────┐                    │
              │      │  Space   │                    │
              │      └──────────┘                    │
              └──────────────────────────────────────┘

Step 2.1: Create a space

A space needs to be associated with at least one embedder. GoodMem supports multi-embedder spaces; for example, you can use two embedders in parallel to benefit from both.
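As a rough sketch of what a multi-embedder request body could look like, the helper below builds the `spaceEmbedders` list from a mapping of embedder IDs to weights. The field names come from the single-embedder request in this step; the helper name, the two placeholder embedder IDs, and the 0.7/0.3 weights are made up for illustration, and whether weights must follow any particular scale is not covered here.

```python
import json


def build_space_request(name, embedder_weights):
    """Build a space-creation body with one spaceEmbedders entry per embedder.

    embedder_weights maps embedder ID -> default retrieval weight.
    Weights are serialized as strings, matching the request in this tutorial.
    """
    if not embedder_weights:
        raise ValueError("a space needs at least one embedder")
    return {
        "name": name,
        "spaceEmbedders": [
            {"embedderId": embedder_id, "defaultRetrievalWeight": str(weight)}
            for embedder_id, weight in embedder_weights.items()
        ],
    }


if __name__ == "__main__":
    body = build_space_request(
        "Goodmem test",
        {"[EMBEDDER_UUID_1]": 0.7, "[EMBEDDER_UUID_2]": 0.3},  # placeholder IDs
    )
    print(json.dumps(body, indent=2))
```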

The commands below create a space with a single embedder.

The embedder ID is referenced by the environment variable $EMBEDDER_ID set in Step 1.1. The space ID is saved to the environment variable $SPACE_ID for later use.

CLI:

goodmem space create \

--name "Goodmem test" \

--embedder-id ${EMBEDDER_ID} | tee /tmp/space_output.txt

export SPACE_ID=$(grep "^ID:" /tmp/space_output.txt | awk '{print $2}')

echo "Space ID: $SPACE_ID"

cURL:

curl -X POST "$GOODMEM_BASE_URL/v1/spaces" \

-H "x-api-key: $GOODMEM_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"name": "Goodmem test",

"spaceEmbedders": [

{

"embedderId": "'"$EMBEDDER_ID"'",

"defaultRetrievalWeight": "1.0"

}

]

}' | tee /tmp/space_response.json | jq

export SPACE_ID=$(jq -r '.spaceId' /tmp/space_response.json)

echo "Space ID: $SPACE_ID"

Python:

# Create a space

response = requests.post(

f"{GOODMEM_BASE_URL}/v1/spaces",

headers={

"x-api-key": GOODMEM_API_KEY,

"Content-Type": "application/json"

},

json={

"name": "Goodmem test",

"spaceEmbedders": [

{

"embedderId": EMBEDDER_ID,

"defaultRetrievalWeight": "1.0"

}

]

}

)

response.raise_for_status()

space_response = response.json()

SPACE_ID = space_response["spaceId"]

print(f"Space ID: {SPACE_ID}")

Go:

// Create a space (Go SDK requires two-arg NewSpaceCreationRequest: name + defaultChunkingConfig)

spaceEmbedder := goodmem.NewSpaceEmbedderConfig(embedderID)

spaceEmbedder.SetDefaultRetrievalWeight(1.0)

defaultChunking := goodmem.NewChunkingConfiguration()

defaultChunking.SetNone(map[string]interface{}{})

spaceReq := goodmem.NewSpaceCreationRequest("Goodmem test", *defaultChunking)

spaceReq.SetSpaceEmbedders([]goodmem.SpaceEmbedderConfig{*spaceEmbedder})

spaceResp, _, err := client.SpacesAPI.CreateSpace(ctx).

SpaceCreationRequest(*spaceReq).Execute()

if err != nil {

log.Fatal(err)

}

spaceID := spaceResp.GetSpaceId()

fmt.Printf("Space ID: %s\n", spaceID)

Java:

SpaceEmbedderConfig spaceEmbedder = new SpaceEmbedderConfig();

spaceEmbedder.setEmbedderId(embedderID);

spaceEmbedder.setDefaultRetrievalWeight(1.0);

ChunkingConfiguration defaultChunking = new ChunkingConfiguration();

defaultChunking.setNone(new java.util.HashMap<>());

SpaceCreationRequest spaceReq = new SpaceCreationRequest();

spaceReq.setName("Goodmem test");

spaceReq.setSpaceEmbedders(java.util.List.of(spaceEmbedder));

spaceReq.setDefaultChunkingConfig(defaultChunking);

Space spaceResp = spacesApi.createSpace(spaceReq);

String spaceID = spaceResp.getSpaceId();

System.out.println("Space ID: " + spaceID);

JavaScript:

const spaceReq = {

name: 'Goodmem test',

spaceEmbedders: [

{ embedderId: EMBEDDER_ID, defaultRetrievalWeight: '1.0' }

],

defaultChunkingConfig: { none: {} }

};

const spaceResp = await spacesApi.createSpace(spaceReq);

const SPACE_ID = spaceResp.spaceId;

console.log('Space ID:', SPACE_ID);

.NET:

var spaceEmbedder = new SpaceEmbedderConfig(embedderID, 1.0);

var defaultChunking = new ChunkingConfiguration { None = new Dictionary<string, object>() };

var spaceReq = new SpaceCreationRequest(

name: "Goodmem test", labels: null,

spaceEmbedders: new List<SpaceEmbedderConfig> { spaceEmbedder },

publicRead: null, ownerId: null,

defaultChunkingConfig: defaultChunking, spaceId: null);

var spaceResp = spacesApi.CreateSpace(spaceReq);

var spaceID = spaceResp.SpaceId;

Console.WriteLine($"Space ID: {spaceID}");

The CLI commands above should print output like the following:

Space created successfully!

ID: [SPACE_UUID]

Name: Goodmem test

Owner: [OWNER_UUID]

Created by: [CREATED_BY_UUID]

Created at: 2026-01-11T21:47:57Z

Public: false

Embedder: [EMBEDDER_UUID] (weight: 1)

Each client ends by printing the space ID:

Space ID: [SPACE_UUID]

Step 2.2: Ingest data into the space

A memory can be ingested from two types of sources:

- Plain text (for example, a conversation, a message, or a code snippet)

- Files (for example, PDFs, Word documents, and text files)

We'll show how to ingest both kinds of data into the space.
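As a preview of how the two source types differ on the wire, the sketch below builds one request body of each kind. The field names `spaceId`, `contentType`, `originalContent`, and `originalContentB64` all appear in the snippets that follow; the helper names are hypothetical, and carrying file bytes as base64 in a JSON body mirrors what the Go and Java snippets do rather than the multipart upload used in the cURL and Python snippets.

```python
import base64
import json


def text_memory_request(space_id, text, content_type="text/plain"):
    """Request body for a plain-text memory."""
    return {"spaceId": space_id, "originalContent": text, "contentType": content_type}


def file_memory_request(space_id, data, content_type):
    """Request body for a binary file, carried as base64 text."""
    return {
        "spaceId": space_id,
        "originalContentB64": base64.b64encode(data).decode("ascii"),
        "contentType": content_type,
    }


if __name__ == "__main__":
    # json.dumps escapes quotes and newlines, so arbitrary text stays valid JSON.
    print(json.dumps(text_memory_request("[SPACE_UUID]", 'He said "hello".')))
    fake_pdf = b"%PDF-1.4 placeholder bytes"  # stand-in for a real file's contents
    print(json.dumps(file_memory_request("[SPACE_UUID]", fake_pdf, "application/pdf")))
```

Building the body with a JSON serializer rather than string concatenation avoids broken requests when the text itself contains double quotes.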

Step 2.2.1: Ingest plain text

The commands below ingest plain text (the originalContent field) into the space (the spaceId field) whose ID was saved to the environment variable $SPACE_ID in Step 2.1. They save the ingested memory ID to the environment variable $MEMORY_ID for later use.

CLI:

goodmem memory create \

--space-id ${SPACE_ID} \

--content "Transformers are a type of neural network architecture that are particularly well-suited for natural language processing tasks. A Transformer model leverages the attention mechanism to capture long-range dependencies in the input sequence." \

--content-type "text/plain" | tee /tmp/memory_text_output.txt

export MEMORY_ID=$(grep "^ID:" /tmp/memory_text_output.txt | awk '{print $2}')

echo "Memory ID: $MEMORY_ID"

cURL:

curl -X POST "$GOODMEM_BASE_URL/v1/memories" \

-H "x-api-key: $GOODMEM_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"spaceId":"'"$SPACE_ID"'",

"originalContent": "'"Transformers are a type of neural network architecture that are particularly well-suited for natural language processing tasks. A Transformer model leverages the attention mechanism to capture long-range dependencies in the input sequence."'",

"contentType": "text/plain"

}' | tee /tmp/memory_text_response.json | jq

export MEMORY_ID=$(jq -r '.memoryId' /tmp/memory_text_response.json)

echo "Memory ID: $MEMORY_ID"

echo "Processing Status: $(jq -r '.processingStatus' /tmp/memory_text_response.json)"

Python:

# Ingest plain text memory

response = requests.post(

f"{GOODMEM_BASE_URL}/v1/memories",

headers={

"x-api-key": GOODMEM_API_KEY,

"Content-Type": "application/json"

},

json={

"spaceId": SPACE_ID,

"originalContent": "Transformers are a type of neural network architecture that are particularly well-suited for natural language processing tasks. A Transformer model leverages the attention mechanism to capture long-range dependencies in the input sequence.",

"contentType": "text/plain"

}

)

response.raise_for_status()

memory_text_response = response.json()

MEMORY_ID = memory_text_response["memoryId"]

processing_status = memory_text_response["processingStatus"]

print(f"Memory ID: {MEMORY_ID}")

print(f"Processing Status: {processing_status}")

Go:

memReq := goodmem.NewMemoryCreationRequest(spaceID, "text/plain")

memReq.SetOriginalContent("Transformers are a type of neural network architecture that are particularly well-suited for natural language processing tasks. A Transformer model leverages the attention mechanism to capture long-range dependencies in the input sequence.")

memResp, _, err := client.MemoriesAPI.CreateMemory(ctx).

MemoryCreationRequest(*memReq).Execute()

if err != nil {

log.Fatal(err)

}

memoryID := memResp.GetMemoryId()

processingStatus := memResp.GetProcessingStatus()

fmt.Printf("Memory ID: %s\n", memoryID)

fmt.Printf("Processing Status: %s\n", processingStatus)

Java:

MemoryCreationRequest memReq = new MemoryCreationRequest();

memReq.setSpaceId(spaceID);

memReq.setContentType("text/plain");

memReq.setOriginalContent("Transformers are a type of neural network architecture that are particularly well-suited for natural language processing tasks. A Transformer model leverages the attention mechanism to capture long-range dependencies in the input sequence.");

Memory memResp = memoriesApi.createMemory(memReq);

String memoryID = memResp.getMemoryId();

String processingStatus = memResp.getProcessingStatus();

System.out.println("Memory ID: " + memoryID);

System.out.println("Processing Status: " + processingStatus);

JavaScript:

const memReq = {

spaceId: SPACE_ID,

contentType: 'text/plain',

originalContent: 'Transformers are a type of neural network architecture that are particularly well-suited for natural language processing tasks. A Transformer model leverages the attention mechanism to capture long-range dependencies in the input sequence.'

};

const memResp = await memoriesApi.createMemory(memReq);

let MEMORY_ID = memResp.memoryId;

const processing_status = memResp.processingStatus;

console.log('Memory ID:', MEMORY_ID);

console.log('Processing Status:', processing_status);

.NET:

var memReq = new MemoryCreationRequest(

memoryId: null,

spaceId: spaceID,

originalContent: "Transformers are a type of neural network architecture that are particularly well-suited for natural language processing tasks. A Transformer model leverages the attention mechanism to capture long-range dependencies in the input sequence.",

originalContentB64: null,

originalContentRef: null,

contentType: "text/plain",

metadata: null,

chunkingConfig: null);

var memResp = memoriesApi.CreateMemory(memReq);

var memoryID = memResp.MemoryId;

var processingStatus = memResp.ProcessingStatus;

Console.WriteLine($"Memory ID: {memoryID}");

Console.WriteLine($"Processing Status: {processingStatus}");

The CLI commands above should print output like the following:

Memory created successfully!

ID: [MEMORY_UUID]

Space ID: [SPACE_UUID]

Content Type: text/plain

Status: PENDING

Created by: [CREATED_BY_UUID]

Created at: 2026-01-11T21:48:19Z

The cURL and SDK snippets end by printing:

Memory ID: [MEMORY_UUID]
Processing Status: PENDING

Step 2.2.2: Ingest a PDF with chunking

For this step, we'll use a sample PDF file. Download it with the following command, which saves it to your current directory.

wget https://raw.githubusercontent.com/PAIR-Systems-Inc/goodmem-samples/main/cookbook/1_Building_a_basic_RAG_Agent_with_GoodMem/sample_documents/employee_handbook.pdf

PDF files are usually text-heavy. Treated as a single memory, such a long text is ineffective for retrieval, and it is inefficient or even impossible (when it exceeds the context window) for the embedder or LLM to process. This is where chunking comes in: it splits the lengthy text into smaller segments. We provide a guide to help you design a chunking strategy for your use case. We'll use a placeholder chunking strategy below.
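To give a feel for what chunk size and overlap mean, here is a deliberately simple fixed-size character chunker. It is not GoodMem's recursive strategy, which is configured through the requests below and runs on the server; `chunk_text` is a hypothetical function that only illustrates how a 512-character window with a 64-character overlap walks through text.

```python
def chunk_text(text, chunk_size=512, chunk_overlap=64):
    """Split text into windows of chunk_size characters.

    Each window starts chunk_size - chunk_overlap characters after the
    previous one, so neighbouring chunks share chunk_overlap characters.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the window already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = "".join(str(i % 10) for i in range(1200))
    for i, chunk in enumerate(chunk_text(sample)):
        print(i, len(chunk))
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one neighbouring chunk when it is shorter than the overlap, at the cost of storing some text twice.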

The command below ingests the PDF file into a new memory in the space specified by the environment variable $SPACE_ID (created in Step 2.1). The resulting memory ID is saved to the environment variable $MEMORY_ID for later use.

CLI:

goodmem memory create \

--space-id ${SPACE_ID} \

--file employee_handbook.pdf | tee /tmp/memory_pdf_output.txt

export MEMORY_ID=$(grep "^ID:" /tmp/memory_pdf_output.txt | awk '{print $2}')

echo "Memory ID: $MEMORY_ID"

No chunking strategy is specified in the CLI command above, so the GoodMem CLI defaults to recursive chunking.

cURL:

REQUEST_JSON=$(jq -n \

--arg spaceId "$SPACE_ID" \

'{

spaceId: $spaceId,

contentType: "application/pdf",

chunkingConfig: {

recursive: {

chunkSize: 512,

chunkOverlap: 64,

keepStrategy: "KEEP_END",

lengthMeasurement: "CHARACTER_COUNT"

}

}

}')

curl -X POST "$GOODMEM_BASE_URL/v1/memories" \

-H "x-api-key: $GOODMEM_API_KEY" \

-F 'file=@employee_handbook.pdf' \

-F "request=$REQUEST_JSON" | tee /tmp/memory_response.json | jq

export MEMORY_ID=$(jq -r '.memoryId' /tmp/memory_response.json)

echo "Memory ID: $MEMORY_ID"

# Build the request JSON

request_data = {

"spaceId": SPACE_ID,

"contentType": "application/pdf",

"metadata": {"source": "FILE"},

"chunkingConfig": {

"recursive": {

"chunkSize": 512,

"chunkOverlap": 64,

"keepStrategy": "KEEP_END",

"lengthMeasurement": "CHARACTER_COUNT"

}

}

}

# Post multipart form data; the with-block closes the file handle after the upload
with open('employee_handbook.pdf', 'rb') as pdf_file:
    response = requests.post(
        f"{GOODMEM_BASE_URL}/v1/memories",
        headers={'x-api-key': GOODMEM_API_KEY},
        files={'file': pdf_file},
        data={'request': json.dumps(request_data, ensure_ascii=False)}
    )

# Fail early on an HTTP error instead of on a missing key below
response.raise_for_status()
memory_response = response.json()
MEMORY_ID = memory_response['memoryId']
print(f"Memory ID: {MEMORY_ID}")

// Read and base64-encode the PDF file

pdfData, err := os.ReadFile("employee_handbook.pdf")

if err != nil {

log.Fatal(err)

}

pdfB64 := base64.StdEncoding.EncodeToString(pdfData)

// Build the memory creation request with chunking

pdfMemReq := goodmem.NewMemoryCreationRequest(spaceID, "application/pdf")

pdfMemReq.SetOriginalContentB64(pdfB64)

pdfMemReq.SetMetadata(map[string]interface{}{"source": "FILE"})

chunkingConfig := goodmem.NewChunkingConfiguration()

chunkingConfig.SetRecursive(*goodmem.NewRecursiveChunkingConfiguration(

512, 64, goodmem.KEEP_END, goodmem.CHARACTER_COUNT,

))

pdfMemReq.SetChunkingConfig(*chunkingConfig)

pdfMemResp, _, err := client.MemoriesAPI.CreateMemory(ctx).

MemoryCreationRequest(*pdfMemReq).Execute()

if err != nil {

log.Fatal(err)

}

memoryID = pdfMemResp.GetMemoryId()

fmt.Printf("Memory ID: %s\n", memoryID)

// Ingest PDF with chunking (read file, base64, MemoryCreationRequest with chunkingConfig)

byte[] pdfData = java.nio.file.Files.readAllBytes(java.nio.file.Paths.get("employee_handbook.pdf"));

String pdfB64 = java.util.Base64.getEncoder().encodeToString(pdfData);

MemoryCreationRequest pdfMemReq = new MemoryCreationRequest();

pdfMemReq.setSpaceId(spaceID);

pdfMemReq.setContentType("application/pdf");

pdfMemReq.setOriginalContentB64(pdfB64);

pdfMemReq.setMetadata(new java.util.HashMap<>(java.util.Map.of("source", "FILE")));

ChunkingConfiguration chunkingConfig = new ChunkingConfiguration();

RecursiveChunkingConfiguration recursive = new RecursiveChunkingConfiguration();

recursive.setChunkSize(512);

recursive.setChunkOverlap(64);

recursive.setKeepStrategy(SeparatorKeepStrategy.KEEP_END);

recursive.setLengthMeasurement(LengthMeasurement.CHARACTER_COUNT);

chunkingConfig.setRecursive(recursive);

pdfMemReq.setChunkingConfig(chunkingConfig);

Memory pdfMemResp = memoriesApi.createMemory(pdfMemReq);

String pdfMemoryID = pdfMemResp.getMemoryId();

System.out.println("Memory ID: " + pdfMemoryID);

// Ingest PDF with chunking (Node.js: use fs to read file and base64)

const fs = require('fs');

const pdfData = fs.readFileSync('employee_handbook.pdf');

const pdfB64 = pdfData.toString('base64');

const pdfMemReq = {

spaceId: SPACE_ID,

contentType: 'application/pdf',

originalContentB64: pdfB64,

metadata: { source: 'FILE' },

chunkingConfig: {

recursive: {

chunkSize: 512,

chunkOverlap: 64,

keepStrategy: 'KEEP_END',

lengthMeasurement: 'CHARACTER_COUNT'

}

}

};

const pdfMemResp = await memoriesApi.createMemory(pdfMemReq);

MEMORY_ID = pdfMemResp.memoryId;

console.log('Memory ID:', MEMORY_ID);

// Ingest PDF with chunking

var pdfData = File.ReadAllBytes("employee_handbook.pdf");

var pdfB64 = Convert.ToBase64String(pdfData);

var chunkingConfig = new ChunkingConfiguration

{

Recursive = new RecursiveChunkingConfiguration

{

ChunkSize = 512,

ChunkOverlap = 64,

KeepStrategy = SeparatorKeepStrategy.KEEPEND,

LengthMeasurement = LengthMeasurement.CHARACTERCOUNT

}

};

var pdfMemReq = new MemoryCreationRequest(

memoryId: null,

spaceId: spaceID,

originalContent: null,

originalContentB64: pdfB64,

originalContentRef: null,

contentType: "application/pdf",

metadata: new Dictionary<string, object> { ["source"] = "FILE" },

chunkingConfig: chunkingConfig);

var pdfMemResp = memoriesApi.CreateMemory(pdfMemReq);

memoryID = pdfMemResp.MemoryId;

Console.WriteLine($"Memory ID: {memoryID}");

The commands above should print output like the following.

Memory created successfully!

ID: [MEMORY_UUID]

Space ID: [SPACE_UUID]

Content Type: application/pdf

Status: PENDING

Created by: [CREATED_BY_UUID]

Created at: 2026-01-11T21:48:19Z

Metadata:

filename: employee_handbook.pdf

Memory ID: [MEMORY_UUID]

You can then check the status as you did in Step 2.2.1 for the plain-text memory.

Step 3: Run the RAG agent

The goal of this step is to bring the RAG agent to the following operational state:

       ┌────────────────────────────────────────┐
       │                                        │
       │          ┌─────────────────────────────┼────────┐
┌──────┴────┐     │                             │        │
│   User    │     │    ┌──────────┐          ┌──▼──┐     │     ┌──────────┐
│   Query   ├─────┼────▶ Embedder │          │ LLM ├─────┼─────▶ Response │
└───────────┘     │    └────┬─────┘          └──▲──┘     │     └──────────┘
                  │         │                   │        │
                  │         │                   │        │
                  │    ┌────▼─────┐       ┌─────┴─────┐  │
                  │    │  Space   ├───────▶ Retrieved │  │
                  │    └──────────┘       │ Knowledge │  │
                  │                       └───────────┘  │
                  └──────────────────────────────────────┘

RAG Agent in action

Now let's ask the question "What do you know about AI?" against the knowledge in the space $SPACE_ID (created in Step 2.1).

The LLM used in this agent is specified by the environment variable $LLM_ID (registered in Step 1.2).

If you run all steps in one script, wait until each ingested memory's processingStatus is COMPLETED (for example, by polling getMemory) before running the RAG agent below. This usually takes a few seconds. Otherwise, the RAG agent may not find any indexed chunks.
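The wait can be sketched as a small polling loop. Both `wait_until_completed` and its `fetch_status` argument are hypothetical: `fetch_status` stands in for whatever call returns a memory's `processingStatus` in your client, such as the status check from Step 2.2.1. The `FAILED` status is an assumption; this tutorial only shows `PENDING` and `COMPLETED`.

```python
import time
from typing import Callable


def wait_until_completed(
    fetch_status: Callable[[], str],
    timeout_s: float = 60.0,
    interval_s: float = 1.0,
) -> str:
    """Poll fetch_status() until it returns COMPLETED; raise on failure or timeout."""
    deadline = time.monotonic() + timeout_s
    while True:
        status = fetch_status()
        if status == "COMPLETED":
            return status
        if status == "FAILED":  # assumed terminal status, not shown in this tutorial
            raise RuntimeError("memory processing failed")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"memory still {status} after {timeout_s} seconds")
        time.sleep(interval_s)


if __name__ == "__main__":
    # Simulated status source: PENDING twice, then COMPLETED.
    statuses = iter(["PENDING", "PENDING", "COMPLETED"])
    print(wait_until_completed(lambda: next(statuses), interval_s=0.01))
```

In a real script you would call this once per ingested memory before issuing the retrieve request.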

goodmem memory retrieve \

--space-id ${SPACE_ID} \

--post-processor-args '{"llm_id": "'"${LLM_ID}"'"}' \
"What do you know about AI?"

curl -X POST "$GOODMEM_BASE_URL/v1/memories:retrieve" \

-H "x-api-key: $GOODMEM_API_KEY" \

-H "Content-Type: application/json" \

-H "Accept: application/x-ndjson" \

-d '{

"message": "What do you know about AI?",

"spaceKeys": [

{

"spaceId": "'"$SPACE_ID"'"

}

],

"postProcessor": {

"name": "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory",

"config": {

"llm_id": "'"$LLM_ID"'"

}

}

}'

# Spin the RAG agent

response = requests.post(

f"{GOODMEM_BASE_URL}/v1/memories:retrieve",

headers={

"x-api-key": GOODMEM_API_KEY,

"Content-Type": "application/json",

"Accept": "application/x-ndjson"

},

json={

"message": "What do you know about AI?",

"spaceKeys": [

{

"spaceId": SPACE_ID

}

],

"postProcessor": {

"name": "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory",

"config": {

"llm_id": LLM_ID

}

}

}

)

response.raise_for_status()

# Process NDJSON response (application/x-ndjson)
for line in response.text.strip().split('\n'):
    if line:
        print(json.dumps(json.loads(line), indent=2, ensure_ascii=False))

The variables SPACE_ID and LLM_ID were defined in previous steps.

stream, err := streamClient.RetrieveMemoryStreamAdvanced(ctx,

&goodmem.AdvancedMemoryStreamRequest{

Message: "What do you know about AI?",

SpaceIDs: []string{spaceID},

Format: goodmem.FormatNDJSON,

PostProcessorName: "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory",

PostProcessorConfig: map[string]interface{}{

"llm_id": llmID,

},

})

if err != nil {

log.Fatal(err)

}

// Process streaming response events

for event := range stream {

respJSON, _ := json.MarshalIndent(event, "", " ")

fmt.Println(string(jsonUnescapeUnicode(respJSON)))

}

The variables spaceID and llmID were defined in previous steps.

// Spin the RAG agent (StreamingClient.retrieveMemoryStreamAdvanced; two-arg constructor; method must declare throws StreamingClient.StreamError — see setup)

StreamingClient.AdvancedMemoryStreamRequest req = new StreamingClient.AdvancedMemoryStreamRequest("What do you know about AI?", java.util.List.of(spaceID));

req.setFormat(StreamingClient.StreamingFormat.NDJSON);

req.setPostProcessorName("com.goodmem.retrieval.postprocess.ChatPostProcessorFactory");

req.setPostProcessorConfig(new java.util.HashMap<>(java.util.Map.of("llm_id", llmID)));

java.util.stream.Stream<StreamingClient.MemoryStreamResponse> stream = streamClient.retrieveMemoryStreamAdvanced(req);

stream.forEach(event -> System.out.println(jsonUnescapeUnicode(GSON.toJson(event))));

The variables spaceID and llmID were defined in previous steps. The method that calls retrieveMemoryStreamAdvanced must declare throws StreamingClient.StreamError (or catch it).

const controller = new AbortController();

const request = {

message: 'What do you know about AI?',

spaceIds: [SPACE_ID],

format: 'ndjson',

postProcessorName: 'com.goodmem.retrieval.postprocess.ChatPostProcessorFactory',

postProcessorConfig: { llm_id: LLM_ID }

};

const stream = await streamingClient.retrieveMemoryStreamAdvanced(controller.signal, request);

for await (const event of stream) {

console.log(JSON.stringify(event, null, 2));

}

The variables SPACE_ID and LLM_ID were defined in previous steps.

// Spin the RAG agent (use UnsafeRelaxedJsonEscaping to display Unicode characters correctly)

using System.Text.Encodings.Web;

using System.Text.Json;

var request = new AdvancedMemoryStreamRequest

{

Message = "What do you know about AI?",

SpaceIds = new List<string> { spaceID },

Format = "ndjson",

PostProcessorName = "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory",

PostProcessorConfig = new Dictionary<string, object> { ["llm_id"] = llmID }

};

var jsonOptions = new JsonSerializerOptions

{

WriteIndented = true,

Encoder = JavaScriptEncoder.UnsafeRelaxedJsonEscaping

};

await foreach (var evt in streamingClient.RetrieveMemoryStreamAdvancedAsync(request))

{

Console.WriteLine(JsonSerializer.Serialize(evt, jsonOptions));

}

The variables spaceID and llmID were defined in previous steps.

An agent in GoodMem is like a lambda function in programming. You do not have to define a named agent. Instead, you compose an agent on the fly by specifying the spaces that supply knowledge and the LLM that turns retrieved knowledge into a response. We call this dynamic composition the Lambda Agent.
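As a rough illustration of this on-the-fly composition, the sketch below builds the request body for `/v1/memories:retrieve` from a list of spaces and an LLM, with an optional reranker (Step 4). `build_retrieve_request` is a hypothetical helper, not part of any GoodMem SDK; the field names and the post-processor name are copied from the requests in this tutorial.

```python
import json
from typing import Optional

CHAT_POST_PROCESSOR = "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory"


def build_retrieve_request(
    message: str,
    space_ids: list[str],
    llm_id: str,
    reranker_id: Optional[str] = None,
) -> dict:
    """Compose a retrieve request: spaces supply knowledge, the LLM writes the reply."""
    config = {"llm_id": llm_id}
    if reranker_id is not None:
        config["reranker_id"] = reranker_id  # optional, see Step 4
    return {
        "message": message,
        "spaceKeys": [{"spaceId": space_id} for space_id in space_ids],
        "postProcessor": {"name": CHAT_POST_PROCESSOR, "config": config},
    }


if __name__ == "__main__":
    # Placeholder IDs; substitute the values saved in earlier steps.
    body = build_retrieve_request("What do you know about AI?", ["SPACE_UUID"], "LLM_UUID")
    print(json.dumps(body, indent=2))
```

Swapping the list of spaces or the LLM ID yields a different agent without registering anything new.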

If it executes successfully, you'll get a very verbose response. Within it, you'll see a segment like this that summarizes the retrieved knowledge:

┌─ Abstract (relevance: 0.00)

│

│ From the retrieved data, one specific thing I know about AI is a key

│ architecture called the Transformer [20]. A Transformer is a type of neural

│ network that is particularly well‑suited for natural language

│ processing tasks. It uses an attention mechanism, which allows the model

│ to capture long‑range dependencies within an input sequence (for

│ example, words that are far apart in a sentence but still related).

└─

{
  "abstractReply": {
    "text": "From the retrieved data, the only direct information about AI is a short description of Transformer models [20]. It states that Transformers are a type of neural network architecture that are particularly well‑suited for natural language processing tasks [20]. A Transformer model uses an attention mechanism, which allows it to capture long‑range dependencies in an input sequence (for example, relationships between words that are far apart in a sentence) [20]. The rest of the retrieved content concerns organizational policies and employment information and does not address AI specifically."
  }
}

Do you recall what we ingested into the space? It's a text snippet about Transformers (Step 2.2.1) and a boilerplate employee handbook (Step 2.2.2). The response clearly states that it's based on the Transformers memory and rejects the employee handbook as not about AI. Makes sense, right?

In particular, the response includes citations, e.g., [20]. With these citations, your agent can point back to or highlight the relevant part of the original document.
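A minimal sketch of pulling the abstract and its citation markers out of an NDJSON response body follows. It assumes the `abstractReply` event shape shown above; `extract_abstract_and_citations` is a hypothetical helper, and mapping a citation number back to a chunk of the original document is not covered here.

```python
import json
import re

CITATION = re.compile(r"\[(\d+)\]")


def extract_abstract_and_citations(ndjson_text: str):
    """Return (abstract text, sorted unique citation numbers) from an NDJSON body."""
    for line in ndjson_text.splitlines():
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        if not isinstance(event, dict):
            continue
        reply = event.get("abstractReply")
        if isinstance(reply, dict) and "text" in reply:
            text = reply["text"]
            numbers = sorted({int(n) for n in CITATION.findall(text)})
            return text, numbers
    return None, []


if __name__ == "__main__":
    sample = (
        '{"other": 1}\n'
        '{"abstractReply": {"text": "Transformers use attention [20]. See [3] and [20]."}}\n'
    )
    text, citations = extract_abstract_and_citations(sample)
    print(citations)  # [3, 20]
```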

Step 4 (optional): Add a reranker

The RAG agent above uses the embedders associated with the space(s) to retrieve knowledge. This retrieval uses the dot product, which is fast but can be less accurate. A reranker can greatly improve accuracy using a more sophisticated cross-encoder approach.
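The two-stage idea can be sketched as follows: a cheap dot product over embeddings selects candidates, then a more expensive scorer that looks at the query and each text together reorders them. This is an illustration, not GoodMem's implementation. The vectors are made up, and `word_overlap` is a toy stand-in for a real cross-encoder such as the one registered in Step 4.1.

```python
from typing import Callable, Sequence


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def retrieve_then_rerank(
    query_vec: Sequence[float],
    query_text: str,
    docs: list,  # list of (text, vector) pairs
    pair_score: Callable[[str, str], float],
    k: int = 3,
    top_n: int = 2,
) -> list:
    """Stage 1: top-k by dot product. Stage 2: reorder those k by pair_score."""
    candidates = sorted(docs, key=lambda d: dot(query_vec, d[1]), reverse=True)[:k]
    reranked = sorted(candidates, key=lambda d: pair_score(query_text, d[0]), reverse=True)
    return [text for text, _ in reranked[:top_n]]


def word_overlap(query: str, text: str) -> float:
    """Toy stand-in for a cross-encoder: counts shared lowercase words."""
    return float(len(set(query.lower().split()) & set(text.lower().split())))


if __name__ == "__main__":
    docs = [
        ("vacation policy for employees", [0.9, 0.1]),
        ("transformers use attention", [0.8, 0.3]),
        ("attention in transformers explained", [0.7, 0.2]),
        ("office parking rules", [0.1, 0.9]),
    ]
    result = retrieve_then_rerank(
        [1.0, 0.0], "how do transformers use attention", docs, word_overlap
    )
    print(result)
```

In this toy data the vacation policy ranks first by dot product, but the second stage moves the two Transformer texts ahead of it.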

Step 4.1: Register a reranker

Since OpenAI doesn't offer a reranker, we'll use one from Voyage AI. First, obtain a Voyage AI API key and set it as an environment variable:

export VOYAGE_API_KEY="your_voyage_api_key"

Registering a reranker in GoodMem is similar to registering an embedder or LLM. The commands below register the Voyage AI rerank-2.5 reranker in GoodMem. The reranker ID is saved to the environment variable $RERANKER_ID for later use.

goodmem reranker create \

--display-name "Voyage rerank-2.5" \

--provider-type VOYAGE \

--endpoint-url "https://api.voyageai.com" \

--api-path "/v1/rerank" \

--model-identifier "rerank-2.5" \

--cred-api-key ${VOYAGE_API_KEY} | tee /tmp/reranker_output.txt

export RERANKER_ID=$(grep "^ID:" /tmp/reranker_output.txt | awk '{print $2}')

echo "Reranker ID: $RERANKER_ID"

curl -X POST "$GOODMEM_BASE_URL/v1/rerankers" \

-H "Content-Type: application/json" \

-H "x-api-key: $GOODMEM_API_KEY" \

-d '{

"displayName": "Voyage rerank-2.5",

"providerType": "VOYAGE",

"endpointUrl": "https://api.voyageai.com/v1",

"modelIdentifier": "rerank-2.5",

"credentials": {

"kind": "CREDENTIAL_KIND_API_KEY",

"apiKey": {

"inlineSecret": "'"$VOYAGE_API_KEY"'"

}

}

}' | tee /tmp/reranker_response.json | jq

export RERANKER_ID=$(jq -r '.rerankerId' /tmp/reranker_response.json)

echo "Reranker ID: $RERANKER_ID"

# Set up Voyage API key

VOYAGE_API_KEY = os.getenv("VOYAGE_API_KEY")

# Register the reranker

response = requests.post(

f"{GOODMEM_BASE_URL}/v1/rerankers",

headers={

"Content-Type": "application/json",

"x-api-key": GOODMEM_API_KEY

},

json={

"displayName": "Voyage rerank-2.5",

"providerType": "VOYAGE",

"endpointUrl": "https://api.voyageai.com/v1",

"modelIdentifier": "rerank-2.5",

"credentials": {

"kind": "CREDENTIAL_KIND_API_KEY",

"apiKey": {

"inlineSecret": VOYAGE_API_KEY

}

}

}

)

response.raise_for_status()

reranker_response = response.json()

RERANKER_ID = reranker_response["rerankerId"]

print(f"Reranker ID: {RERANKER_ID}")

// Set up Voyage API key

voyageAPIKey := os.Getenv("VOYAGE_API_KEY")

voyageKeyAuth := goodmem.NewApiKeyAuth()

voyageKeyAuth.SetInlineSecret(voyageAPIKey)

voyageCreds := goodmem.NewEndpointAuthentication(goodmem.CREDENTIAL_KIND_API_KEY)

voyageCreds.SetApiKey(*voyageKeyAuth)

rerankerReq := goodmem.NewRerankerCreationRequest(

"Voyage rerank-2.5",

goodmem.VOYAGE,

"https://api.voyageai.com/v1",

"rerank-2.5",

)

rerankerReq.SetCredentials(*voyageCreds)

rerankerResp, _, err := client.RerankersAPI.CreateReranker(ctx).

RerankerCreationRequest(*rerankerReq).Execute()

if err != nil {

log.Fatal(err)

}

rerankerID := rerankerResp.GetRerankerId()

fmt.Printf("Reranker ID: %s\n", rerankerID)

String voyageAPIKey = System.getenv("VOYAGE_API_KEY");

ai.pairsys.goodmem.client.model.ApiKeyAuth voyageKeyAuth = new ai.pairsys.goodmem.client.model.ApiKeyAuth();

voyageKeyAuth.setInlineSecret(voyageAPIKey);

EndpointAuthentication voyageCreds = new EndpointAuthentication();

voyageCreds.setKind(CredentialKind.CREDENTIAL_KIND_API_KEY);

voyageCreds.setApiKey(voyageKeyAuth);

RerankerCreationRequest rerankerReq = new RerankerCreationRequest();

rerankerReq.setDisplayName("Voyage rerank-2.5");

rerankerReq.setProviderType(ProviderType.VOYAGE);

rerankerReq.setEndpointUrl("https://api.voyageai.com/v1");

rerankerReq.setModelIdentifier("rerank-2.5");

rerankerReq.setCredentials(voyageCreds);

RerankerResponse rerankerResp = rerankersApi.createReranker(rerankerReq);

String rerankerID = rerankerResp.getRerankerId();

System.out.println("Reranker ID: " + rerankerID);

const VOYAGE_API_KEY = process.env.VOYAGE_API_KEY;

const rerankerReq = {

displayName: 'Voyage rerank-2.5',

providerType: 'VOYAGE',

endpointUrl: 'https://api.voyageai.com/v1',

modelIdentifier: 'rerank-2.5',

credentials: {

kind: 'CREDENTIAL_KIND_API_KEY',

apiKey: { inlineSecret: VOYAGE_API_KEY }

}

};

const rerankerResp = await rerankersApi.createReranker(rerankerReq);

const RERANKER_ID = rerankerResp.rerankerId;

console.log('Reranker ID:', RERANKER_ID);

var voyageAPIKey = Environment.GetEnvironmentVariable("VOYAGE_API_KEY");

var voyageKeyAuth = new ApiKeyAuth { InlineSecret = voyageAPIKey };

var voyageCreds = new EndpointAuthentication

{

Kind = CredentialKind.CREDENTIALKINDAPIKEY,

ApiKey = voyageKeyAuth

};

var rerankerReq = new RerankerCreationRequest(

displayName: "Voyage rerank-2.5",

description: null,

providerType: ProviderType.VOYAGE,

endpointUrl: "https://api.voyageai.com/v1",

apiPath: null,

modelIdentifier: "rerank-2.5",

supportedModalities: null,

credentials: voyageCreds,

labels: null,

varVersion: null,

monitoringEndpoint: null,

ownerId: null,

rerankerId: null);

var rerankerResp = rerankersApi.CreateReranker(rerankerReq);

var rerankerID = rerankerResp.RerankerId;

Console.WriteLine($"Reranker ID: {rerankerID}");

The commands above should print output like the following.

Reranker created successfully!

ID: [RERANKER_UUID]

Display Name: Voyage rerank-2.5

Owner: [OWNER_UUID]

Provider Type: VOYAGE

Endpoint URL: https://api.voyageai.com

API Path: /v1/rerank

Model: rerank-2.5

Created: 2026-01-11T13:48:38-08:00

Updated: 2026-01-11T13:48:38-08:00

Reranker ID: [RERANKER_UUID]

Step 4.2: Use the reranker in the agent

To use the reranker in RAG, expand the Step 3 call with the reranker ID:

goodmem memory retrieve \

--space-id ${SPACE_ID} \

--post-processor-args '{"llm_id": "'"${LLM_ID}"'", "reranker_id": "'"${RERANKER_ID}"'"}' \
"What do you know about AI?"

# Compared to the RAG agent call in Step 3, the only addition is the "reranker_id" field.
curl -X POST "$GOODMEM_BASE_URL/v1/memories:retrieve" \
  -H "x-api-key: $GOODMEM_API_KEY" \
  -H "Content-Type: application/json" \
  -H "Accept: application/x-ndjson" \
  -d '{
    "message": "What do you know about AI?",
    "spaceKeys": [
      {
        "spaceId": "'"$SPACE_ID"'"
      }
    ],
    "postProcessor": {
      "name": "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory",
      "config": {
        "llm_id": "'"$LLM_ID"'",
        "reranker_id": "'"$RERANKER_ID"'"
      }
    }
  }'

# Spin the RAG agent with reranker

response = requests.post(

f"{GOODMEM_BASE_URL}/v1/memories:retrieve",

headers={

"x-api-key": GOODMEM_API_KEY,

"Content-Type": "application/json",

"Accept": "application/x-ndjson"

},

json={

"message": "What do you know about AI?",

"spaceKeys": [

{

"spaceId": SPACE_ID

}

],

"postProcessor": {

"name": "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory",

"config": {

"llm_id": LLM_ID,

"reranker_id": RERANKER_ID # New line, compared to the RAG agent call in Step 3

}

}

}

)

response.raise_for_status()

# Process NDJSON response (application/x-ndjson)
for line in response.text.strip().split('\n'):
    if line:
        print(json.dumps(json.loads(line), indent=2, ensure_ascii=False))

stream, err = streamClient.RetrieveMemoryStreamAdvanced(ctx,

&goodmem.AdvancedMemoryStreamRequest{

Message: "What do you know about AI?",

SpaceIDs: []string{spaceID},

Format: goodmem.FormatNDJSON,

PostProcessorName: "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory",

PostProcessorConfig: map[string]interface{}{

"llm_id": llmID,

"reranker_id": rerankerID, // New line, compared to the RAG agent call in Step 3

},

})

if err != nil {

log.Fatal(err)

}

// Process streaming response events

for event := range stream {

respJSON, _ := json.MarshalIndent(event, "", " ")

fmt.Println(string(jsonUnescapeUnicode(respJSON)))

}

// Spin the RAG agent with reranker (two-arg constructor; method must declare throws StreamingClient.StreamError)

StreamingClient.AdvancedMemoryStreamRequest req = new StreamingClient.AdvancedMemoryStreamRequest("What do you know about AI?", java.util.List.of(spaceID));

req.setFormat(StreamingClient.StreamingFormat.NDJSON);

req.setPostProcessorName("com.goodmem.retrieval.postprocess.ChatPostProcessorFactory");

java.util.Map<String, Object> config = new java.util.HashMap<>();

config.put("llm_id", llmID);

config.put("reranker_id", rerankerID);

req.setPostProcessorConfig(config);

java.util.stream.Stream<StreamingClient.MemoryStreamResponse> stream = streamClient.retrieveMemoryStreamAdvanced(req);

stream.forEach(event -> System.out.println(jsonUnescapeUnicode(GSON.toJson(event))));

const controller = new AbortController();

const request = {

message: 'What do you know about AI?',

spaceIds: [SPACE_ID],

format: 'ndjson',

postProcessorName: 'com.goodmem.retrieval.postprocess.ChatPostProcessorFactory',

postProcessorConfig: { llm_id: LLM_ID, reranker_id: RERANKER_ID }

};

const stream = await streamingClient.retrieveMemoryStreamAdvanced(controller.signal, request);

for await (const event of stream) {

console.log(JSON.stringify(event, null, 2));

}

// Spin the RAG agent with reranker (use UnsafeRelaxedJsonEscaping to display Unicode characters correctly)

using System.Text.Encodings.Web;

using System.Text.Json;

var request = new AdvancedMemoryStreamRequest

{

Message = "What do you know about AI?",

SpaceIds = new List<string> { spaceID },

Format = "ndjson",

PostProcessorName = "com.goodmem.retrieval.postprocess.ChatPostProcessorFactory",

PostProcessorConfig = new Dictionary<string, object>

{

["llm_id"] = llmID,

["reranker_id"] = rerankerID

}

};

var jsonOptions = new JsonSerializerOptions

{

WriteIndented = true,

Encoder = JavaScriptEncoder.UnsafeRelaxedJsonEscaping

};

await foreach (var evt in streamingClient.RetrieveMemoryStreamAdvancedAsync(request))

{

Console.WriteLine(JsonSerializer.Serialize(evt, jsonOptions));

}

Congratulations! You now have a RAG agent that uses three core components of a RAG stack: an embedder, an LLM, and a reranker.

Why GoodMem

In this tutorial, we built a RAG agent with much less code than other RAG frameworks require. You only declare what you need: which embedder and LLM to use and what to add to memories. GoodMem handles the rest. This simplicity doesn't come at the expense of flexibility: you can create a space with more than one embedder, and a RAG agent can use more than one space. You can even gate space access by user role.

Beyond efficient building, GoodMem gives you deployment flexibility without vendor lock-in. You can deploy on-premises, air-gapped, or on any cloud provider you pick (GCP, Railway, Fly.io, etc.). You control your data sovereignty, and you won't be locked out of your data. GoodMem has enterprise-grade security (encryption, RBAC) and compliance (SOC 2, FIPS, HIPAA) features built in. It is natively multimodal (paid feature), so there is no need for OCR.

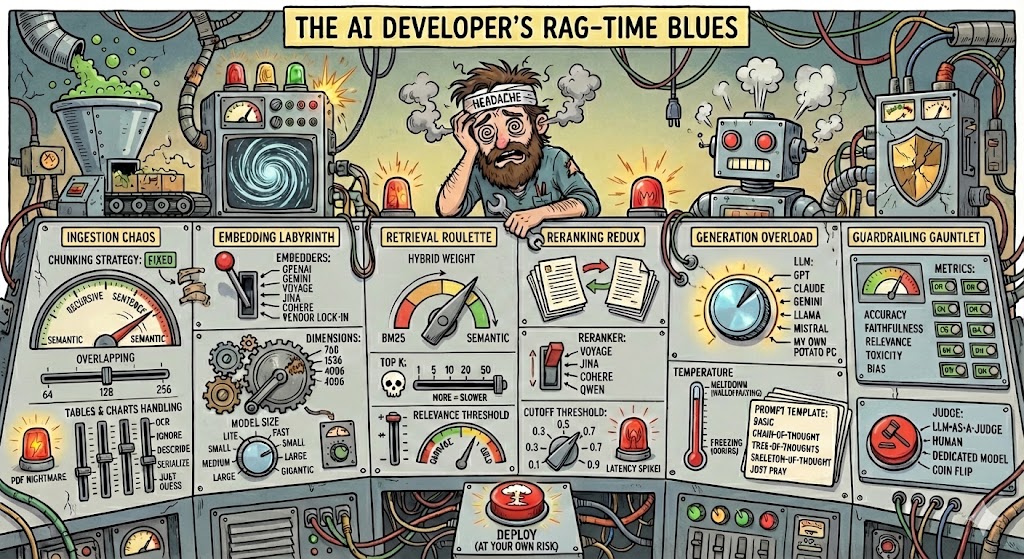

Max out RAG performance with GoodMem Tuner

The tutorial above gives you a working RAG agent. But in the real world, you want a RAG stack that not only works but also works well. You want the highest accuracy at the lowest latency and cost, using everything at your disposal.

That means a large number of tweaks and knobs. For the LLM alone, here are some questions to ask:

- Should I use a model from the Gemini, GPT, or Qwen series?

- If I use the GPT series, is my use case simple enough to warrant GPT-5-mini or even GPT-5-nano, which are cheaper and faster than GPT-5?

- Should I turn on thinking/reasoning mode? If so, what token budget or level (high, low, or default) should I use?

- When is it worth fine-tuning the LLM?

- How should I set the prompt so the LLM gives me a direct answer without rambling?

This is a rabbit hole.

This is where GoodMem Tuner comes in. It adjusts the knobs to find the best configuration for your use case. It doesn't tune just once; it tunes throughout the lifetime of your RAG agent. Think of it as lifetime fine-tuning. It can work with or without user feedback signals.

GoodMem Tuner is a paid feature. Contact us at hello@pairsys.ai for details.